The rapid proliferation of AI-generated content has created an unprecedented challenge: we're witnessing the emergence of closed-loop systems where artificial intelligence generates content that subsequently trains future AI models. This creates a dangerous flywheel effect that threatens the diversity, accuracy, and authenticity of AI systems. For companies building AI monitoring and analysis tools, maintaining strict neutrality isn't just an ethical consideration; it's essential for preventing the degradation of the entire AI ecosystem.

The Flywheel Problem Explained

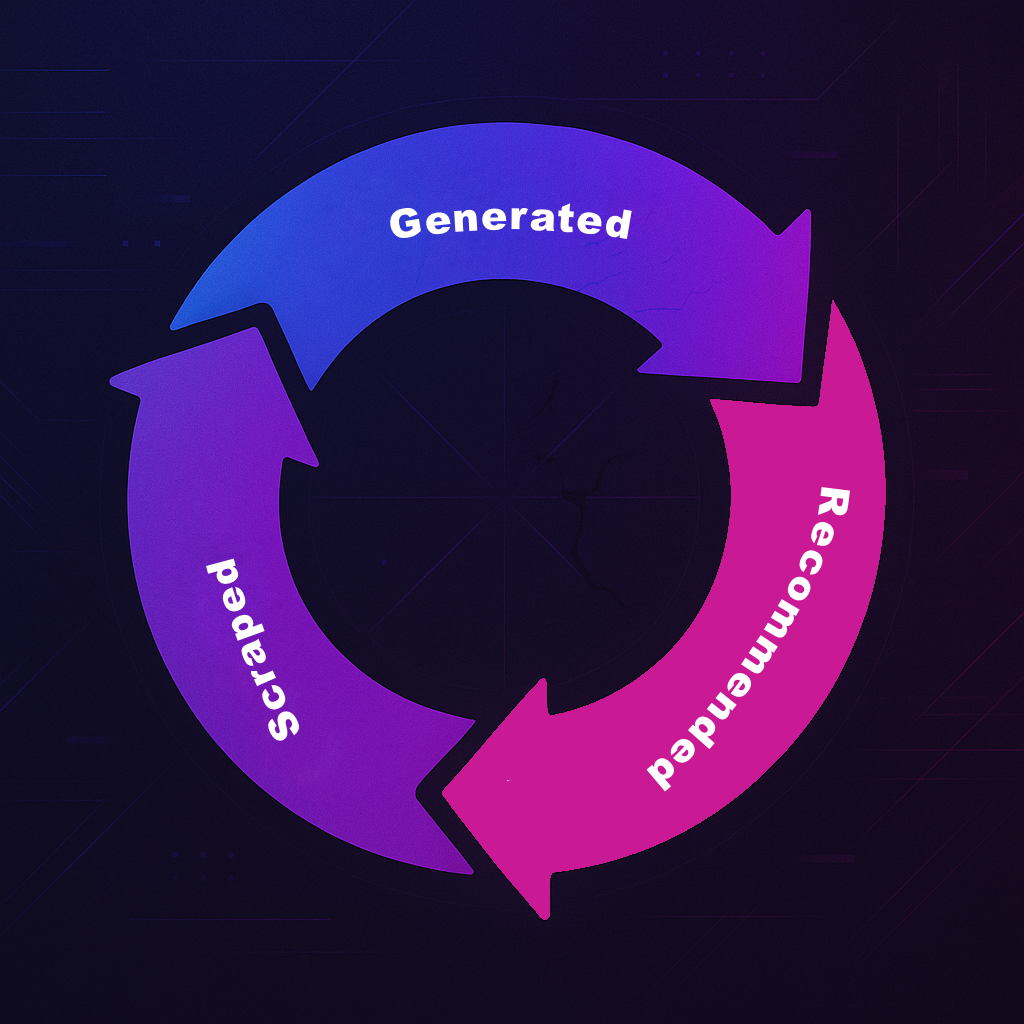

Imagine this scenario: An AI model generates marketing copy for a brand. That content gets published online. Later, when training data is scraped for the next generation of AI models, this AI-generated content becomes part of the training dataset. The new model learns from artificial content, potentially amplifying biases, reducing creativity, and creating increasingly homogenized outputs.

This isn't theoretical; it's happening now. According to Europol's Innovation Lab observatory and recent industry analysis, experts estimate that as much as 90 percent of online content may be synthetically generated by 2026. Current data already shows significant penetration. As of July 2025, AI-generated content made up 19.56% of Google search results according to an ongoing Originality.AI study, an all-time high. In research publishing, studies indicate that about one in seven biomedical research abstracts published in 2024 was probably written with the help of AI, and computer science papers show an even higher rate, with approximately one-fifth containing AI-generated content.

The Monitoring Tool Trap

Brand sentiment analysis tools face a particularly insidious version of this problem. Consider a typical workflow:

- A sentiment analysis tool evaluates how AI models perceive a brand

- The tool identifies areas for improvement and generates recommendations

- The brand uses that tool's AI to implement these recommendations, creating new content or messaging

- This optimized content eventually becomes part of the training data for future AI models

- The cycle repeats, but now with artificially optimized inputs

The result? AI models trained on content specifically designed to game AI sentiment analysis, leading to increasingly distorted perceptions and recommendations.
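The trap can be illustrated with a deliberately simple Python sketch. Everything in it is hypothetical: the word-weight `LEXICON` stands in for an AI sentiment scorer, and the greedy `optimize_for_score` loop stands in for a brand tuning its copy against that scorer. Real tools are far more sophisticated; the sketch only shows how a score can be pushed up without changing what the text actually says about the product.

```python
# Toy illustration of content being optimized against a sentiment scorer.
# LEXICON, score(), and optimize_for_score() are hypothetical stand-ins.

LEXICON = {
    "innovative": 2.0,
    "trusted": 1.5,
    "leading": 1.0,
    "slow": -1.5,
    "expensive": -1.0,
}


def score(text: str) -> float:
    """Average lexicon weight per word (unknown words count as 0)."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    if not words:
        return 0.0
    return sum(LEXICON.get(w, 0.0) for w in words) / len(words)


def optimize_for_score(text: str, rounds: int) -> tuple[str, list[float]]:
    """Greedily append the highest-weighted word each round, recording the score.

    The original complaint is never removed; only the scorer is gamed.
    """
    best_word = max(LEXICON, key=LEXICON.get)
    history = [score(text)]
    for _ in range(rounds):
        text = f"{text} {best_word}"
        history.append(score(text))
    return text, history


if __name__ == "__main__":
    copy, scores = optimize_for_score("Our checkout is slow and expensive.", rounds=5)
    print(copy)
    print([round(s, 2) for s in scores])
```

In this toy run the score rises every round, from negative to positive, while the complaint about a slow, expensive checkout stays in the text. Content like this, once scraped, would teach the next model the optimized wording rather than the customer experience.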

The Science Behind Model Collapse

The phenomenon we're describing has a formal name in AI research: model collapse. Groundbreaking research published in Nature by Shumailov et al. demonstrates that "indiscriminate use of model-generated content in training causes irreversible defects in the resulting models, in which tails of the original content distribution disappear".

The research identifies two specific stages: early model collapse, where "the model begins losing information about the tails of the distribution, mostly affecting minority data," and late model collapse, where "the model loses a significant proportion of its performance, confusing concepts and losing most of its variance".
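The mechanism can be reproduced in a toy Gaussian setting, a common way to illustrate model collapse (the sample size, generation count, and seed below are illustrative assumptions). Each "generation" fits a mean and standard deviation to the previous generation's samples, then the next generation is trained only on samples drawn from that fit. Because every fit is estimated from a finite sample, estimation error compounds and the fitted spread drifts toward zero, which is the loss of tails described above.

```python
import random
import statistics


def simulate_collapse(n_samples: int, generations: int, seed: int = 0) -> list[float]:
    """Refit a Gaussian to its own samples each generation; return the fitted std per generation.

    Generation 0 is fit to "human" data drawn from N(0, 1). Every later generation
    is fit only to samples drawn from the previous generation's fitted model.
    """
    rng = random.Random(seed)
    data = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    stds = []
    for _ in range(generations):
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)  # maximum-likelihood estimate of the spread
        stds.append(sigma)
        # Replace the training data entirely with synthetic samples.
        data = [rng.gauss(mu, sigma) for _ in range(n_samples)]
    return stds


if __name__ == "__main__":
    stds = simulate_collapse(n_samples=10, generations=300)
    for g in (0, 50, 100, 200, 299):
        print(f"generation {g:3d}: fitted std = {stds[g]:.3g}")
```

With these settings the fitted standard deviation typically shrinks by many orders of magnitude over a few hundred generations. Larger samples slow the drift but do not remove it, because each refit still starts from a finite sample of the previous model's output.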

Recent work from NYU's Center for Data Science provides additional insight, showing that "as more synthetic data is incorporated into training datasets, the traditional scaling laws that have driven AI progress no longer hold". The implications are far-reaching: as AI-generated content proliferates online, future AI models trained on web-scraped data will inevitably encounter increasing amounts of synthetic information, potentially slowing or even halting rapid progress in the field.

Bias Amplification Through Feedback Loops

The flywheel effect doesn't just affect content quality; it also systematically amplifies biases. Research on "Fairness Feedback Loops" (Wyllie, Shumailov, and Papernot, 2024) demonstrates that when models induce distribution shifts, they "encode their mistakes, biases, and unfairnesses into the ground truth of their data ecosystem," leading to "disproportionately negative impacts on minoritized groups".

Studies from Princeton University describe a related effect called "algorithmic confounding": recommendation algorithms are trained on user behavior that the algorithms themselves shaped, so "as users within these bubbles interact with the confounded algorithms, they are being encouraged to behave the way the algorithm thinks they will behave". The result is filter bubbles in which user choices narrow toward increasingly extreme content and users are separated into ideological echo chambers where differing viewpoints are discarded.

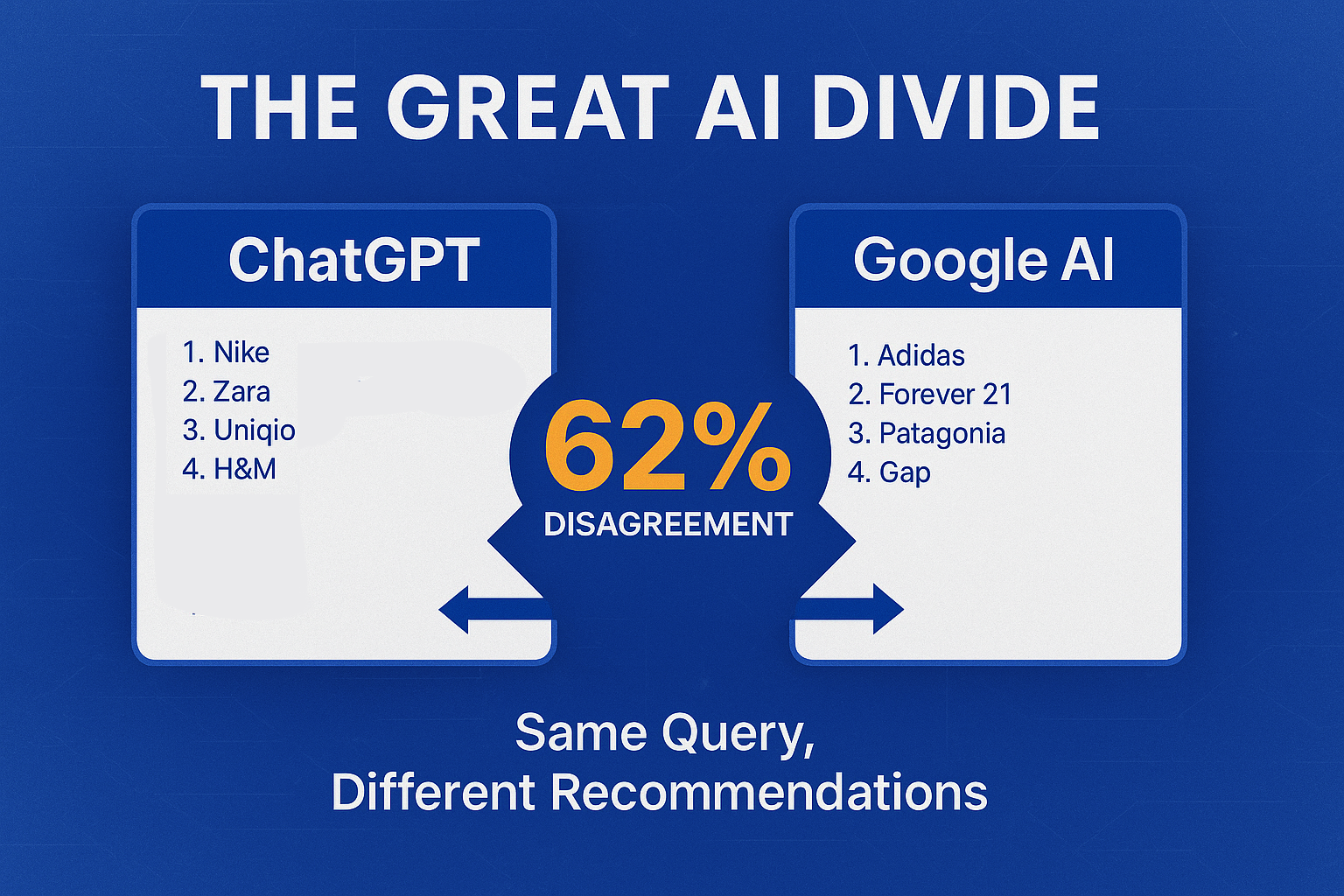

Analysis of transformer-based models shows that "classifiers trained on synthetic data increasingly favor certain labels over generations," while "generative models like Stable Diffusion show bias amplification through feature overrepresentation from training data".
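A deterministic toy model shows how a small label skew can snowball. It assumes, as a simplification rather than a claim about any specific system, that each generation's classifier is slightly mode-seeking: when it relabels data, it sharpens the label distribution it learned, modeled here by temperature scaling with temperature 0.5 (squaring the class probabilities and renormalizing). The next generation then trains on those labels.

```python
def sharpen(p: float, temperature: float = 0.5) -> float:
    """Return the share of label A after temperature-scaling a two-class distribution.

    temperature < 1 is mode-seeking: it pushes the majority label's share up.
    """
    a = p ** (1.0 / temperature)
    b = (1.0 - p) ** (1.0 / temperature)
    return a / (a + b)


def label_share_over_generations(p0: float, generations: int) -> list[float]:
    """Track the share of label A when each generation trains on the previous one's labels."""
    shares = [p0]
    for _ in range(generations):
        shares.append(sharpen(shares[-1]))
    return shares


if __name__ == "__main__":
    for g, p in enumerate(label_share_over_generations(0.6, 6)):
        print(f"generation {g}: share of label A = {p:.4f}")
```

Starting from a 60/40 split, label A's share passes 99% within four generations. A perfectly balanced 50/50 start stays put, but any imbalance is amplified, which matches the "converging to the majority" pattern described in this literature.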

Why Neutrality Matters

For AI monitoring tools to provide genuine value, they must maintain strict separation between observation and influence. This means:

Observation Only:

Tools should analyze existing sentiment and perceptions without generating content that could influence future training data.

No Synthetic Content Creation:

Recommendations should focus on strategic direction rather than providing ready-made content that brands might use verbatim.

Transparent Methodology:

The analysis process should be clearly documented and auditable to ensure it doesn't introduce artificial patterns.

Training Data Hygiene:

Tools should actively avoid contributing to the pollution of future AI training datasets.

Current State of Sentiment Analysis Tools

The sentiment analysis industry has seen explosive growth, with the global sentiment analysis software market valued at $2.1 billion in 2024 and projected to reach $6.85 billion by 2033, a compound annual growth rate (CAGR) of 14.1%. Buyers are also demanding more transparency: a 2025 Gartner survey found that 78% of organizations consider explainability a "must-have" feature when selecting sentiment analysis tools, up from just 41% in 2022.
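As a quick consistency check on these market figures, the CAGR implied by growing from $2.1 billion in 2024 to $6.85 billion in 2033 (nine years of compounding) is (end / start) ** (1 / years) - 1. The helper names below are our own, for illustration only.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1.0 / years) - 1.0


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` per year for `years` years."""
    return start * (1.0 + rate) ** years


if __name__ == "__main__":
    implied = cagr(2.1, 6.85, 2033 - 2024)
    print(f"implied CAGR: {implied:.2%}")
    print(f"2033 value at 14.1%: ${project(2.1, 0.141, 9):.2f}B")
```

The implied rate comes out at about 14.0%, and compounding $2.1 billion at 14.1% for nine years gives roughly $6.9 billion, so the quoted endpoints and growth rate agree up to rounding.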

Current sentiment analysis tools still face significant challenges, including "accurately interpreting human language, including sarcasm, irony, and contextual meaning," and the need for "real-time sentiment analysis for businesses to respond promptly to customer concerns". Modern sentiment analysis tools use natural language processing (NLP) to understand the context behind social media posts, reviews, and feedback, but the underlying algorithms and machine learning models must be carefully designed to avoid perpetuating biases.

Technical Solutions for Maintaining Separation

Implementing true neutrality requires deliberate technical choices:

Read-Only Analysis

Monitor and analyze existing brand sentiment across AI platforms without creating new content samples or examples. Focus on understanding patterns rather than generating templates.
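One way to enforce "observation only" in code is to make the analysis layer accept immutable snapshots of model responses and return aggregate statistics rather than text. The sketch below assumes responses have already been collected from each AI platform; `ModelResponse`, `SentimentSummary`, `summarize`, and the toy scorer are hypothetical names, and a real tool would plug in its own sentiment model as `score_fn`.

```python
from dataclasses import dataclass
from statistics import fmean
from typing import Callable, Iterable


@dataclass(frozen=True)
class ModelResponse:
    """An immutable snapshot of what one AI platform said about a brand."""
    platform: str
    text: str


@dataclass(frozen=True)
class SentimentSummary:
    """Aggregate output only: numbers, never generated copy."""
    platform: str
    n_responses: int
    mean_score: float


def summarize(
    responses: Iterable[ModelResponse],
    score_fn: Callable[[str], float],
) -> list[SentimentSummary]:
    """Group responses by platform and report mean sentiment.

    The function only reads responses and returns statistics; it produces no
    new text that could end up in a training corpus.
    """
    by_platform: dict[str, list[float]] = {}
    for r in responses:
        by_platform.setdefault(r.platform, []).append(score_fn(r.text))
    return [
        SentimentSummary(p, len(scores), fmean(scores))
        for p, scores in sorted(by_platform.items())
    ]


if __name__ == "__main__":
    # Toy scorer for illustration only: +1 if "reliable" appears, -1 if "slow" does.
    def toy_score(text: str) -> float:
        t = text.lower()
        return float("reliable" in t) - float("slow" in t)

    snapshot = (
        ModelResponse("platform_a", "Reliable but slow support."),
        ModelResponse("platform_a", "Very reliable."),
        ModelResponse("platform_b", "Slow shipping."),
    )
    for summary in summarize(snapshot, toy_score):
        print(summary)
```

Because the dataclasses are frozen and the only output is numbers, nothing on this path can emit publishable copy.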

Strategic Recommendations, Not Tactical Content

Instead of providing specific messaging that could be copy-pasted, offer strategic insights about positioning, tone, and approach that require human interpretation and creativity.

Watermarking and Attribution

If any content examples are necessary for illustration, clearly mark them as synthetic and ensure they cannot be easily harvested for training data.
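A minimal sketch of the labeling half of this advice, assuming illustrative examples are delivered to clients as JSON: each example carries an explicit provenance record and a visible marker. The field names (`synthetic`, `generator`, `purpose`), the marker text, and both helper functions are hypothetical, not an established standard. This is labeling rather than robust watermarking; metadata and visible markers can both be stripped.

```python
import json
from datetime import datetime, timezone

SYNTHETIC_MARKER = "[ILLUSTRATIVE AI-GENERATED EXAMPLE - NOT FOR PUBLICATION]"


def label_synthetic_example(text: str, generator: str, purpose: str) -> str:
    """Wrap an illustrative example in a visible marker plus provenance metadata.

    Returns a JSON string so downstream systems can filter on the `synthetic` flag.
    """
    record = {
        "synthetic": True,
        "generator": generator,
        "purpose": purpose,
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "text": f"{SYNTHETIC_MARKER} {text}",
    }
    return json.dumps(record)


def is_labeled_synthetic(payload: str) -> bool:
    """Return True if a payload carries both the synthetic flag and the visible marker."""
    record = json.loads(payload)
    return record.get("synthetic") is True and record.get("text", "").startswith(SYNTHETIC_MARKER)


if __name__ == "__main__":
    payload = label_synthetic_example(
        "Fast, friendly support.", generator="example-model", purpose="tone illustration"
    )
    print(payload)
    print(is_labeled_synthetic(payload))
```

Carrying the flag in structured metadata, not only in the visible text, lets a scraper or dataset builder that respects it drop these examples with a single field check.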

Data Source Verification

Actively filter out AI-generated content from analysis datasets, implementing "diverse training data" approaches to ensure representation from various demographics, perspectives, and ideologies.
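One simple way to implement this filtering step is to threshold the output of an AI-text detector. The sketch below is generic over the detector: `detector` is a hypothetical callable assumed to return an estimated probability that a text is machine-generated, and the 0.5 threshold is an arbitrary placeholder. Detectors are imperfect, so the sketch records what was excluded for later auditing instead of silently discarding it.

```python
from dataclasses import dataclass, field
from typing import Callable, Sequence


@dataclass
class FilterReport:
    """Texts kept for analysis and texts excluded as likely AI-generated."""
    kept: list[str] = field(default_factory=list)
    excluded: list[str] = field(default_factory=list)


def filter_synthetic(
    texts: Sequence[str],
    detector: Callable[[str], float],
    threshold: float = 0.5,
) -> FilterReport:
    """Split texts into kept (likely human) and excluded (likely AI-generated).

    `detector` is assumed to return P(machine-generated) in [0, 1]; texts at or
    above `threshold` are excluded.
    """
    report = FilterReport()
    for text in texts:
        if detector(text) >= threshold:
            report.excluded.append(text)
        else:
            report.kept.append(text)
    return report


if __name__ == "__main__":
    # Stand-in detector scores for demonstration; a real pipeline would call a trained model.
    fake_scores = {
        "Loved the app, crashed once though.": 0.1,
        "As an AI language model, I think this brand is great.": 0.95,
    }
    report = filter_synthetic(list(fake_scores), fake_scores.__getitem__)
    print(f"kept {len(report.kept)}, excluded {len(report.excluded)}")
```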

The Stakes Are Higher Than You Think

The flywheel effect threatens more than content quality; it undermines the fundamental utility of AI systems. When models are trained on increasingly synthetic data:

- Bias Amplification: Small biases become magnified through each generation, with "chains of generative models eventually converging to the majority and amplifying model mistakes that eventually come to dominate and degrade the data"

- Reduced Diversity: Content becomes increasingly homogenized, with research showing "consistent decrease in lexical, syntactic, and semantic diversity of model outputs through successive iterations"

- Loss of Authenticity: AI outputs lose connection to genuine human experience

- Degraded Performance: Models become less effective at understanding real-world scenarios
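Lexical diversity of model outputs can be tracked with simple n-gram statistics. One common choice, distinct-n, is the number of unique n-grams divided by the total number of n-grams across a set of outputs; a value that falls from one model generation to the next signals homogenization. This is not necessarily the exact metric used in the cited research, and the whitespace tokenization below is a simplifying assumption.

```python
def distinct_n(outputs: list[str], n: int = 2) -> float:
    """Unique n-grams divided by total n-grams across all outputs (0.0 if there are none)."""
    total = 0
    unique: set[tuple[str, ...]] = set()
    for text in outputs:
        tokens = text.lower().split()
        ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        total += len(ngrams)
        unique.update(ngrams)
    return len(unique) / total if total else 0.0


if __name__ == "__main__":
    varied = ["the service was quick", "staff felt rushed today", "prices seem fair enough"]
    homogenized = ["the service was great", "the service was great", "the service was great"]
    print(f"varied: {distinct_n(varied):.2f}")
    print(f"homogenized: {distinct_n(homogenized):.2f}")
```

Here the varied set scores 1.00 (every bigram is unique) and the homogenized set 0.33 (three unique bigrams out of nine).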

For brand monitoring specifically, this means sentiment analysis becomes increasingly disconnected from actual consumer perceptions, making insights less valuable over time.

Best Practices for the Industry

The AI monitoring industry needs to establish standards that prevent contribution to the flywheel problem:

1. Separation of Concerns

Maintain clear boundaries between analysis tools and content creation tools. A company might offer both services, but they should be architecturally and operationally separate.

2. Training Data Transparency

AI companies should clearly disclose what types of content are included in training datasets and actively filter out synthetic content where possible.

3. Industry Standards for Neutrality

Develop industry standards that prioritize "algorithmic transparency and accountability," with developers establishing "accountability measures and allowing external audits to help identify and rectify potential biases".

4. Human Oversight and Intervention

Keep "human oversight" paramount, because "human intervention can provide context, ethical considerations, and a nuanced understanding that AI may lack".

Evidence-Based Solutions

Recent research provides hope that the flywheel problem is solvable. Studies show that "AI developers can avoid degraded performance by training AI models with both real data and multiple generations of synthetic data," with this "accumulation standing in contrast with the practice of entirely replacing original data with AI-generated data".
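The difference between replacing and accumulating data can be seen in a toy Gaussian setting. The setup and parameters are illustrative assumptions, not the cited studies' experiments. In "replace" mode each generation is fit only to the previous generation's samples; in "accumulate" mode the original data and every generation's synthetic samples stay in the training pool.

```python
import random
import statistics


def final_std(mode: str, n_samples: int = 10, generations: int = 300, seed: int = 0) -> float:
    """Repeatedly fit a Gaussian to (partly) self-generated data; return the last fitted std.

    mode="replace": train each generation only on the previous generation's samples.
    mode="accumulate": keep the original data plus all earlier synthetic samples.
    """
    if mode not in ("replace", "accumulate"):
        raise ValueError(f"unknown mode: {mode}")
    rng = random.Random(seed)
    pool = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]  # original "real" data
    sigma = statistics.pstdev(pool)
    for _ in range(generations):
        mu, sigma = statistics.fmean(pool), statistics.pstdev(pool)
        synthetic = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        if mode == "replace":
            pool = synthetic  # the real data is discarded after the first generation
        else:
            pool.extend(synthetic)  # real data is never thrown away
    return sigma


if __name__ == "__main__":
    print(f"replace:    final std = {final_std('replace'):.3g}")
    print(f"accumulate: final std = {final_std('accumulate'):.3g}")
```

In replace mode the spread collapses toward zero, while in accumulate mode it stays on the order of the original data's spread: each new synthetic batch is a shrinking fraction of a pool anchored by the real samples.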

NYU researchers have demonstrated that "using reinforcement techniques to curate high-quality synthetic data" and "employing external verifiers, such as existing metrics, separate AI models, oracles, and humans, to rank and select the best AI-generated data" can overcome performance plateaus.
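The verifier-based curation idea can be sketched as "generate many, keep few": candidates are scored by an external verifier, and only the best ones that clear a quality bar are admitted to training data. In this sketch the verifier is any callable returning a score (a metric, a separate model, or a human rating); `curate`, `keep_top_k`, and `min_score` are illustrative names and parameters, not values from the NYU work.

```python
import heapq
from typing import Callable, Sequence


def curate(
    candidates: Sequence[str],
    verifier: Callable[[str], float],
    keep_top_k: int,
    min_score: float,
) -> list[str]:
    """Return up to `keep_top_k` candidates with the highest verifier scores.

    Candidates scoring below `min_score` are rejected even if fewer than
    `keep_top_k` remain, so weak synthetic data never enters the pool.
    """
    scored = [(verifier(c), c) for c in candidates]
    eligible = [(s, c) for s, c in scored if s >= min_score]
    best = heapq.nlargest(keep_top_k, eligible, key=lambda sc: sc[0])
    return [c for _, c in best]


if __name__ == "__main__":
    # Toy verifier: prefer longer candidates (purely illustrative).
    picks = curate(["ok", "a detailed answer", "short one", "x"], verifier=len, keep_top_k=2, min_score=3)
    print(picks)
```

The `min_score` floor matters as much as the ranking: without it, a batch of uniformly poor candidates would still contribute its "best" members to the next training set.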

The Path Forward

At Sentaiment, we've made a conscious decision to maintain strict neutrality in our analysis. Our platform observes and analyzes brand sentiment across AI models without generating content that could influence future training. We focus on strategic insights that require human creativity to implement, rather than providing tactical content that could be mechanically adopted.

This isn't just about ethics; it's about effectiveness. The most valuable insights come from understanding authentic perceptions, not perceptions shaped by previous AI recommendations.

A Call for Industry Standards

The AI ecosystem is at a critical juncture. As AI-generated content fills the Internet, it's corrupting the training data for models to come. We can continue down a path where artificial content increasingly dominates training data, leading to models that understand synthetic patterns better than human ones. Or we can establish practices that maintain the integrity of AI training while still providing valuable insights.

The choice we make now will determine whether future AI systems become increasingly sophisticated tools for understanding human experience, or elaborate mirrors reflecting their own artificial patterns.

The flywheel is spinning. The question is: will we feed it, or will we build the brakes?

The future of AI depends on maintaining the distinction between observation and influence. As builders of AI monitoring tools, we have both the opportunity and the responsibility to ensure our systems enhance rather than distort the AI ecosystem.

References

- Shumailov, I., Shumaylov, Z., Zhao, Y. et al. (2024). "AI models collapse when trained on recursively generated data." Nature 631, 755–759. https://doi.org/10.1038/s41586-024-07566-y

- OODAloop. (2024). "By 2026, Online Content Generated by Non-humans Will Vastly Outnumber Human Generated Content." https://oodaloop.com/analysis/archive/if-90-of-online-content-will-be-ai-generated-by-2026-we-forecast-a-deeply-human-anti-content-movement-in-response/

- Quidgest. (2024). "90% of Online Content Created by Generative AI by 2025." https://quidgest.com/en/blog-en/generative-ai-by-2025/

- Originality.AI. (2025). "Amount of AI Content in Google Search Results - Ongoing Study." https://originality.ai/ai-content-in-google-search-results

- Liang, W. et al. (2025). "One-fifth of computer science papers may include AI content." Science. DOI: 10.1126/science.adt8027

- NYU Center for Data Science. (2024). "Overcoming the AI Data Crisis: A New Solution to Model Collapse." Medium. https://nyudatascience.medium.com/overcoming-the-ai-data-crisis-a-new-solution-to-model-collapse-ddc5b382e182

- Wyllie, S., Shumailov, I., & Papernot, N. (2024). "Fairness Feedback Loops: Training on Synthetic Data Amplifies Bias." Proceedings of the 2024 ACM Conference on Fairness, Accountability, and Transparency.

- Chaney, A., Stewart, B., & Engelhardt, B. (2025). "Feedback loops and echo chambers: How algorithms amplify viewpoints." The Conversation. https://theconversation.com/feedback-loops-and-echo-chambers-how-algorithms-amplify-viewpoints-107935

- Xu, H. et al. (2025). "Bias Amplification: Large Language Models as Increasingly Biased Media." arXiv preprint arXiv:2410.15234.

- Business Research Insights. (2024). "Sentiment Analysis: A Comprehensive, Data-Backed Guide For 2025." https://penfriend.ai/blog/sentiment-analysis

- VisionEdge Marketing. (2025). "4 Practical Tips to Avoid Confirmation Bias with AI." https://visionedgemarketing.com/avoid-ai-confirmation-bias-4-practical-tips/

- Scientific American. (2024). "AI-Generated Data Can Poison Future AI Models." https://www.scientificamerican.com/article/ai-generated-data-can-poison-future-ai-models/