# BEACON Blog Series: Shaping Brand Perception in the Age of AI

This comprehensive series on the BEACON methodology is brought to you by Sentaiment, the industry leader in AI brand monitoring across 280+ language models. To learn more about how our platform can help you benchmark, evaluate, audit, correct, optimize, and navigate your brand's AI presence, contact our team of experts today.

## Introduction

In today's digital landscape, your brand exists not just in the minds of consumers but also in the vast knowledge bases of AI systems. According to Harvard Business Review, "70 to 80 percent of a company's market value comes from hard-to-assess intangible assets like brand equity, intellectual capital, and goodwill" (Signal AI, 2023). Understanding how your brand appears across 280+ language models is no longer optional; it is essential business intelligence. This first installment of our BEACON series explores how to establish your brand's AI visibility baseline.

## What is AI Brand Visibility?

AI brand visibility refers to how accurately, consistently, and prominently your brand appears in responses generated by language models. Unlike traditional SEO, which focuses on search engine rankings, AI visibility measures how your brand is represented, contextualized, and discussed when users interact with AI assistants.

## The Multi-Model Reality

Why monitoring across 280+ models matters:

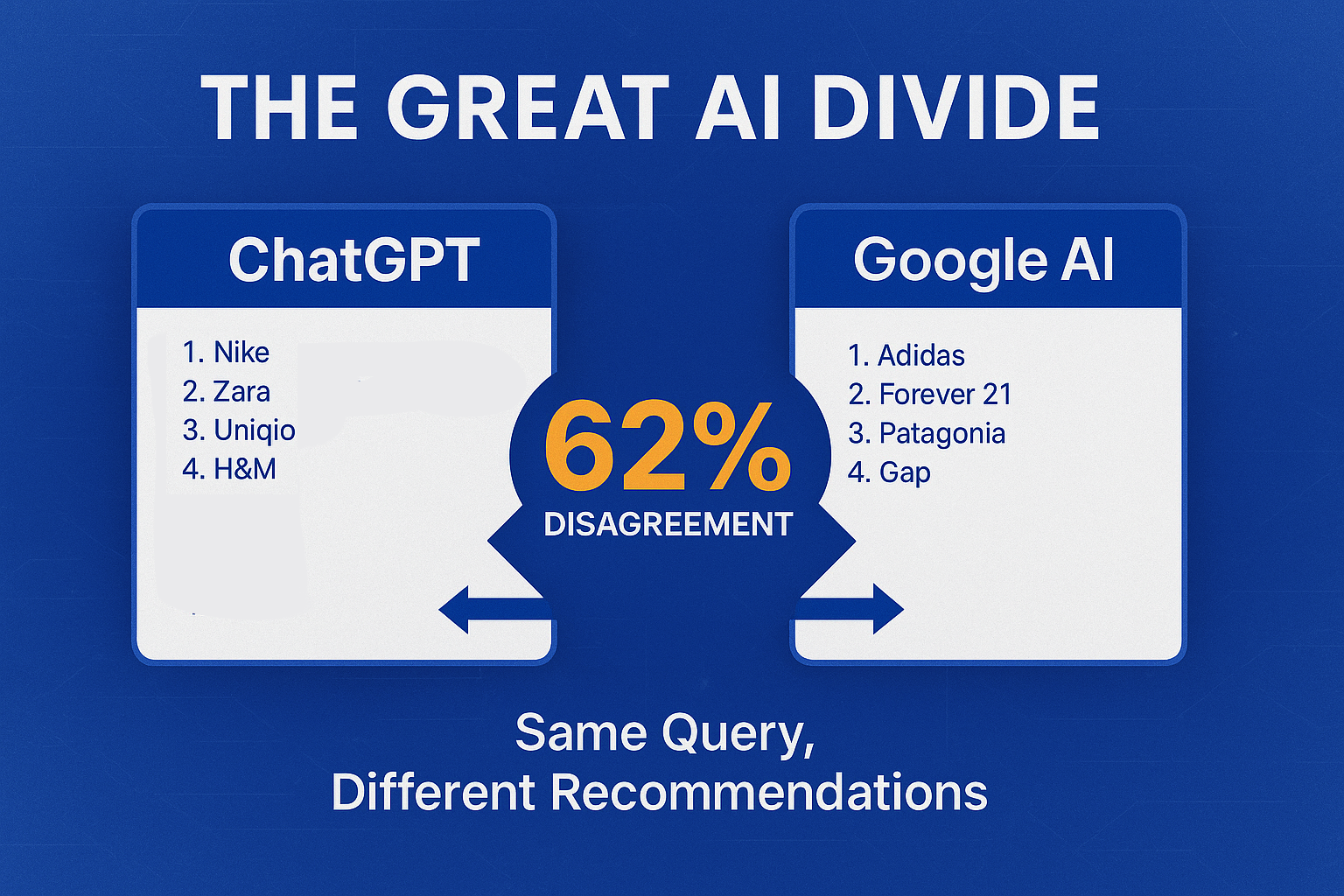

Most brands focus exclusively on ChatGPT or Claude and miss the broader AI ecosystem. Our research shows that brand representation can vary by up to 43% across different models, creating inconsistent customer experiences depending on which AI assistant a customer uses. The financial implications are significant: the global LLM market is projected to grow by 36% from 2024 to 2030 (Analyzify, 2025), making AI brand monitoring an essential strategic investment.

"Each LLM has its own unique way of understanding and representing brands based on its training data, optimization processes, and knowledge cutoff dates. What appears comprehensive and accurate in one model may be outdated or misaligned in another." — Dr. Sarah Chen, AI Ethics Researcher

This fragmentation across AI platforms calls for a strategic multi-model approach to brand monitoring. Recent data shows ChatGPT processes over 1 billion user messages daily, while Google AI Overview, Perplexity, and Gemini are gaining significant market share in their respective niches (Analyzify, 2025).

## How to Run Your First AI Visibility Audit

- Define your benchmark categories

  - Brand associations (which concepts, values, and attributes are linked to your brand)

  - Product accuracy (correctness of features, pricing, availability)

  - Competitive positioning (how you're compared to alternatives)

  - Historical context (how your brand evolution is portrayed)

- Create standardized prompts

  - Direct inquiries ("Tell me about [Brand]")

  - Comparative questions ("How does [Brand] compare to [Competitor]?")

  - Feature-specific queries ("What are [Brand]'s sustainability practices?")

  - Industry positioning ("What are the leading companies in [industry]?")

- Establish scoring methodology

  - Presence score (is your brand mentioned where relevant?)

  - Accuracy score (is information correct and current?)

  - Sentiment score (how positively is your brand portrayed?)

  - Prominence score (how central is your brand to the response?)
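The prompt and scoring steps above could be sketched in code roughly as follows. This is a minimal illustration, not Sentaiment's implementation: the template wording comes from the list above, but `build_prompts`, `ResponseScore`, `baseline`, the 0.0 to 1.0 scale, the equal weighting, and the brand and model names are all assumptions made for the example. How each score is assigned (human review, a classifier, or something else) is left open.

```python
from dataclasses import dataclass
from statistics import mean

# Prompt templates taken from the list above; placeholders are filled per brand.
PROMPT_TEMPLATES = {
    "direct": "Tell me about {brand}",
    "comparative": "How does {brand} compare to {competitor}?",
    "feature": "What are {brand}'s sustainability practices?",
    "industry": "What are the leading companies in {industry}?",
}


def build_prompts(brand: str, competitor: str, industry: str) -> dict[str, str]:
    """Fill every template so each model receives identical wording."""
    return {
        name: template.format(brand=brand, competitor=competitor, industry=industry)
        for name, template in PROMPT_TEMPLATES.items()
    }


@dataclass
class ResponseScore:
    """Scores for one model's response; a 0.0-1.0 scale is assumed."""
    model: str
    presence: float
    accuracy: float
    sentiment: float
    prominence: float

    def overall(self) -> float:
        # Equal weighting is an assumption; adjust it to your priorities.
        return mean([self.presence, self.accuracy, self.sentiment, self.prominence])


def baseline(scores: list[ResponseScore]) -> dict[str, float]:
    """Average each dimension across models to form a visibility baseline."""
    dimensions = ("presence", "accuracy", "sentiment", "prominence")
    return {d: mean(getattr(s, d) for s in scores) for d in dimensions}


# Hypothetical brand, competitor, and model names, for illustration only.
prompts = build_prompts("Acme", "Globex", "outdoor apparel")
scores = [
    ResponseScore("model-a", 1.0, 0.5, 0.75, 0.25),
    ResponseScore("model-b", 0.0, 0.0, 0.5, 0.0),
]
print(prompts["comparative"])
print(baseline(scores))
```

Keeping the templates in one place means every model is asked the same question in the same words, so differences in the scores reflect the models rather than the phrasing.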

## The Visibility Gap

This visibility gap meant millions in sustainability investments weren't shaping their AI brand narrative, a blind spot that would have remained undiscovered without comprehensive multi-model benchmarking. In the words of Bernard Huang, speaking at Ahrefs Evolve, "LLMs are the first realistic search alternative to Google" (Ahrefs, 2024), which makes visibility across these platforms essential for brand integrity.

## Next Steps

A proper benchmark gives you the foundation for all future AI brand work. Before moving to the Evaluation phase (our next article), ensure you have:

- Documented your current visibility across a representative sample of LLMs

- Identified key information gaps and inconsistencies

- Established a quantitative baseline for measuring improvement

- Prioritized which aspects of your AI brand presence need immediate attention
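One way to turn this checklist into numbers is to summarize each scoring dimension by its average across models (the baseline) and by its spread across models (the inconsistency). The sketch below is only an illustration: the scores are made up, `summarize` is a hypothetical helper, and ranking the lowest average first is an assumed prioritization rule, not part of the BEACON methodology as described here.

```python
from statistics import mean

# Hypothetical per-model scores on an assumed 0.0-1.0 scale.
scores_by_model = {
    "model-a": {"presence": 1.0, "accuracy": 0.5, "sentiment": 0.75},
    "model-b": {"presence": 0.5, "accuracy": 0.25, "sentiment": 0.75},
    "model-c": {"presence": 0.0, "accuracy": 0.0, "sentiment": 0.75},
}


def summarize(scores: dict[str, dict[str, float]]) -> list[dict]:
    """Return one row per dimension with its mean and cross-model spread."""
    dimensions = sorted({d for per_model in scores.values() for d in per_model})
    rows = []
    for dim in dimensions:
        values = [per_model[dim] for per_model in scores.values()]
        rows.append({
            "dimension": dim,
            "mean": mean(values),                 # quantitative baseline
            "spread": max(values) - min(values),  # inconsistency across models
        })
    # Lowest mean first, so the weakest dimension is listed first (assumed rule).
    return sorted(rows, key=lambda row: row["mean"])


for row in summarize(scores_by_model):
    print(row)
```

A dimension with a high spread but a reasonable mean points to inconsistency between models, while a low mean with a low spread points to a gap that is shared across all of them.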