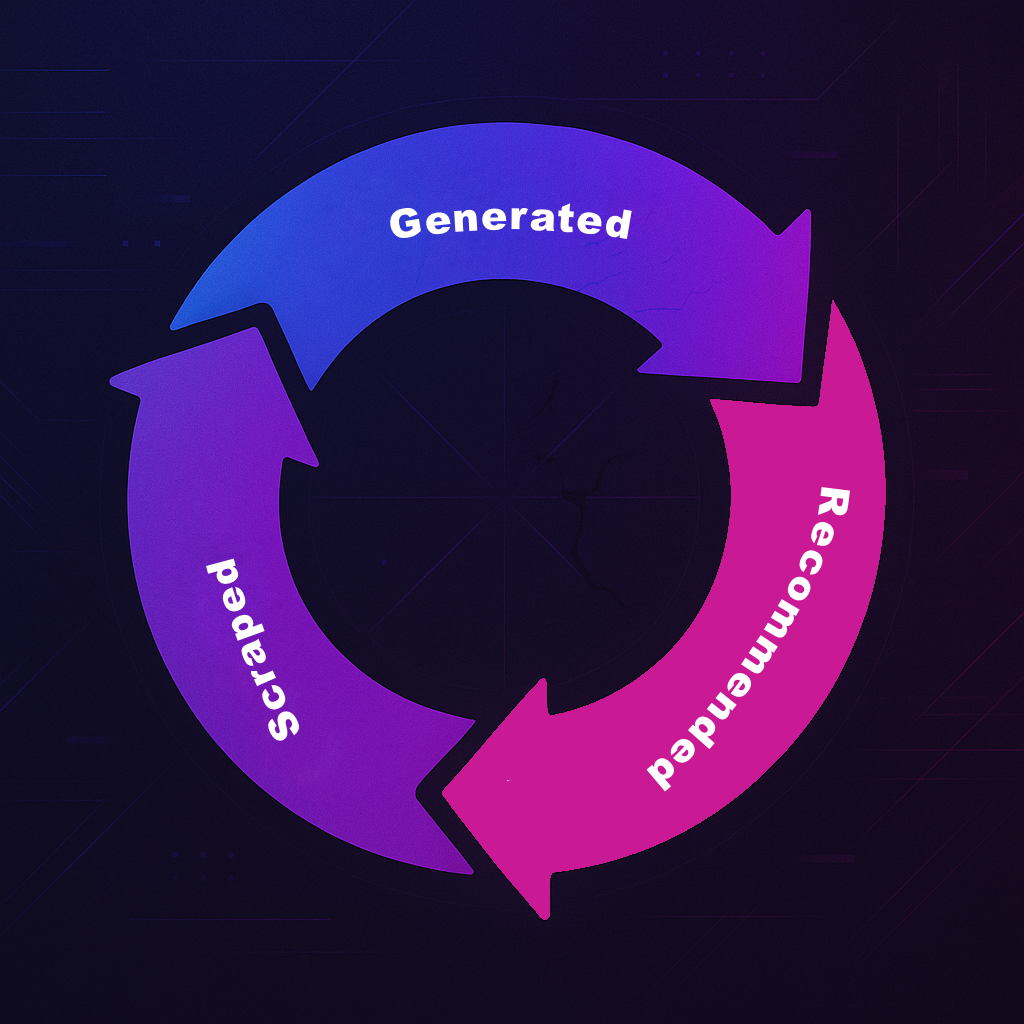

The AI Content Flywheel: Why Monitoring Tools Must Stay Neutral

Discover how AI-generated content creates dangerous feedback loops in training data. Learn why sentiment analysis tools must maintain strict neutrality to prevent model collapse and bias amplification.

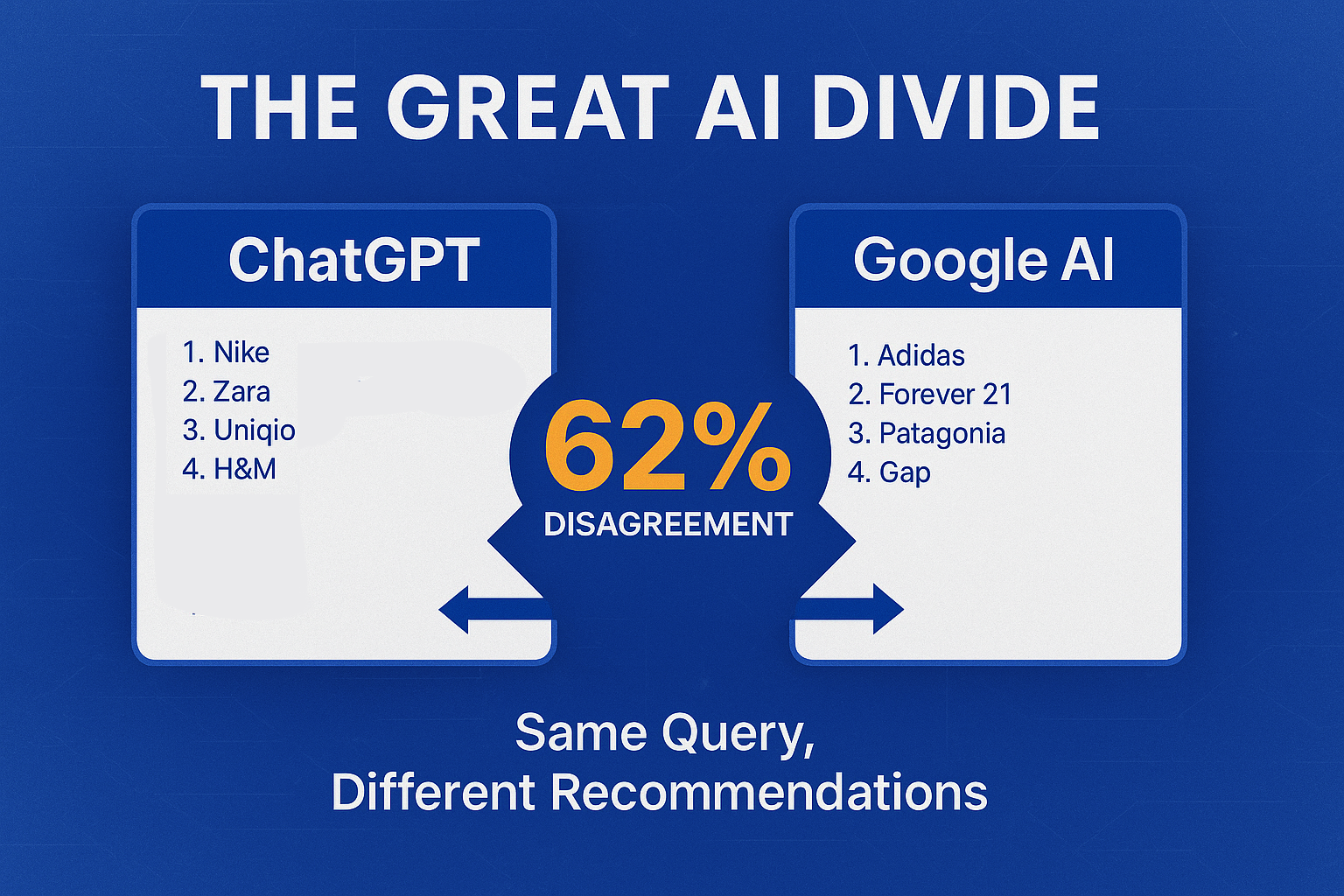

SEO was about Google. Now it's about ChatGPT.

The discovery revolution that created SEO is happening again with AI. Learn why systematic AI optimization is the new competitive advantage.

How to Check Your Brand in ChatGPT: 2-Minute Assessment Guide

Learn how to assess your brand's representation in ChatGPT with this simple 2-minute process. Discover what AI says about your company and competitors.

The Problems with Generative Engine Optimization: What GEO Tools Won't Tell You

Industry exposé: Why most LLM brand monitoring companies use flawed synthetic testing and cannot influence AI responses as promised. Academic research reveals the truth about GEO limitations.

The Scary Truth: AI Already Rewrites Your Brand Story Daily

66% of Gen Z asks AI for brand recommendations, but most agencies have zero visibility into how AI perceives their clients' brands. New research reveals that AI creates negative brand halos and shapes buying decisions daily. Are you monitoring your clients' AI presence?

Risk Assessment Framework for AI in Marketing Agencies

A major sportswear brand recently launched an AI-generated campaign claiming its shoes were "scientifically proven to increase vertical jump by 40%", a complete fabrication that triggered FTC scrutiny and a $2.5 million settlement. This costly hallucination demonstrates why marketing agencies need robust AI risk controls. With over 50% of online queries projected to involve LLMs by 2025, that need has never been greater. Marketing agencies face a critical challenge: managing the risks of AI tools while capturing their benefits. AI hallucinations (outputs that deviate from reality) can damage campaign performance and client reputation. In this article, we'll walk through five key steps: Risk Identification, Risk Analysis, Risk Evaluation, Risk Mitigation, and Monitoring & Continuous Improvement.

AI Hallucinations: How False Facts Can Damage Brand Reputation

When Google Bard confidently claimed in a February 2023 promotional video that the James Webb Space Telescope took the first image of an exoplanet, it wasn't just wrong: it triggered a $100 billion drop in Alphabet's market value. This single AI hallucination demonstrated how artificial intelligence can damage brand reputation in seconds. Today, as AI systems become the go-to information source for millions, these confident fabrications pose a growing threat to your brand's carefully crafted narrative. Our research projects that over 50% of online queries will involve LLMs by 2025. Let's examine what hallucinations are, how they spread, and what you can do to protect your brand.

Perception Analysis vs Sentiment Analysis: The New PR Standard

Traditional sentiment analysis only captures surface reactions, while perception analysis reveals how audiences truly understand your brand through context, narrative framing, and competitive positioning. Discover why PR professionals need both metrics in today's AI-driven landscape where over 50% of online queries will soon involve language models.
